What Grammarly can (and can’t) do for academics, authors, and businesses

Automated editing tools, human editors, and the three C’s

Grammarly, the automated editing tool for writers that detects errors in grammar, spelling, punctuation and more, has recently caught the attention of student writers as well as writing professionals, in part because of its aggressive advertising campaigns.

The hugely popular artificial intelligence-based technology appears on almost every online list of top editing tools, along with competitors such as CorrectEnglish, Ginger, Jetpack, Paperrater, and ProWritingAid.

Read about:

- How editing is a specialised skill

- The 3Cs of editing

- Grammarly’s Services

- How to use Grammarly’s editing tools

- Grammarly vs. a professional human editor

- Too long; didn’t read?

- Test 1: Business writing

- Test 2: Creative project

- Test 3: Academic writing

- Sorting the editorial wheat from the algorithmic chaff

- Grammarly best suits those who may need it least

- 5 reasons businesses should choose professional human editors over editing tools

- Is a human editor the ultimate upgrade?

Editing is a specialised skill

As an editor at WordsRU, I have worked with scores of clients with various editing and proofreading needs. Through this work, and through my previous academic editing and writing work at several universities in the US and the UK, I have come to see editing as a specialised skill. It requires not just an eye for detail and a thorough understanding of language rules, but also an awareness of what might be called the three C’s: Context, Content, and Comment.

The 3Cs of editing

Context refers to the writer’s larger purposes or goals, the genre (or document type) that he or she is using, and also the way one part of a document relates to—provides context for understanding—another part.

Content refers both to what is being said in the document and to how it is being said, encompassing matters of substance as well as style.

Comment refers to the process of framing thoughts, suggestions, and questions for the writer, with a view to providing the best possible advice for revision.

Would a piece of software, however advanced, be able to take the three Cs into consideration? Can artificial intelligence replace me? I was more than a little curious and decided to test my skills against machine learning. To this end, I targeted Grammarly’s editing services, to assess ways in which tools like Grammarly might, and might not, be useful to our clients.

What Grammarly offers

I began by digging into the Grammarly website, where I found a breakdown of the three types, or levels, of service: Free, Premium, and Business. Grammarly’s free service for individuals, available for download when one registers on the site, offers ‘basic writing corrections’ in the form of correctness checks of grammar, spelling, and punctuation, with only limited checks for clarity, engagement, and delivery, or what might be better called presentation.

Check out what you can get for WordsRU’s Basic and Plus services.

Grammarly’s Premium and Business services

The Premium service offers ‘advanced writing feedback’ for individual users, while the Business service offers ‘all Premium features for teams of 3 to 149’. These subscription-based services check for ‘consistency in spelling and punctuation’ and ‘fluency’ over and above the Free service’s basic checks.

Only the Premium and Business levels of service offer plagiarism detection. This tool checks whether the writer has inadvertently used material that needs to be cited.

How to use Grammarly’s editing tools

You can access Grammarly’s editing tools through the online Grammarly Editor and the Grammarly Desktop App, among other means.

The service allows you to upload, paste in, or manually type in passages or documents that you wish to have proofread for errors and edited for content as well as style.

The editing tool also:

- has an add-in for both Microsoft Word and Microsoft Outlook (more about the Word add-in in a moment)

- offers Google Docs support

- offers support for browser plugins

- has a desktop app for both Windows and Mac OS

- has a native app and associated keyboard for iOS and Android devices.

Despite the many ways one could use the editing tool, Grammarly still has a way to go before it can satisfy Mac users. As of early March 2020, the Microsoft Word add-in was not generally available for Word for Mac, whichever plan you have (Free, Premium, or Business). Grammarly is currently beta-testing an add-in for Microsoft Word for Mac, but the beta version does not work on older versions of Word. If you are a Mac user, this is a frustrating disadvantage. You can, however, use the desktop app, which is what I did to conduct my experiment.

The Free service offers only basic editing suggestions, while the Premium and Business services offer ‘Basic + Advanced’ suggestions.

Grammarly regularly nudges users towards purchasing the subscription-based services through repeated reminders of the limitations of the free service.

As I sorted through the various features and options, my central questions were:

- How does all this translate into feedback on the writing of actual users?

- What kind of proofreading and editing advice would Grammarly give?

- How would the recommendations for revision compare with the suggestions that I, as an editor as well as a writer, might offer in its place?

With this in mind, I purchased a month-long Premium subscription to review Grammarly.

Grammarly versus a WordsRU editor

By way of experiment, I created three writing samples, one for each of the three main categories of clients we interact with here at WordsRU: authors, academics, and businesses. Each passage was approximately 200 words long and varied in other factors directly related to the three Cs: that is, the passages differed from each other in subject area, style, purpose, target audience, and so on.

I wrote two of the passages myself. They consisted of one paragraph from a creative project of mine and another paragraph from an academic project.

The third paragraph was taken from an editing/copywriting project that I worked on as a WordsRU editor. For this project, I edited and expanded on text for a website that would be used to promote a client’s consulting business.

Grammarly’s editing tools are the same across the various platforms and interfaces. The different levels of service provide varying degrees of access to these tools. Hence, to make my experiment more comprehensive, I ran my three sample texts through both the Free and Premium services for individuals.

Too long; didn’t read?

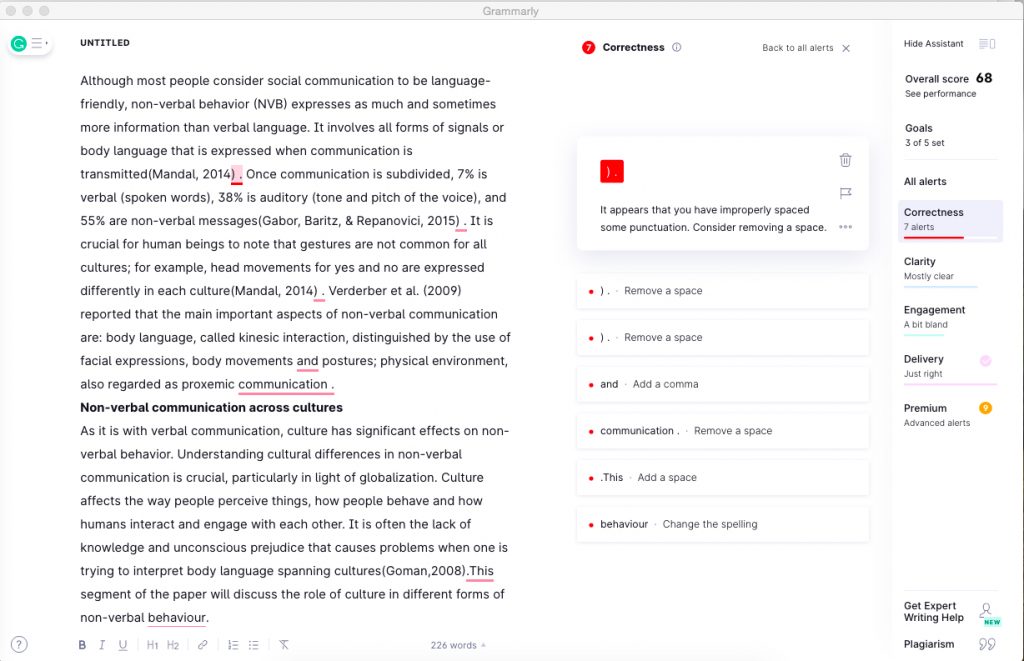

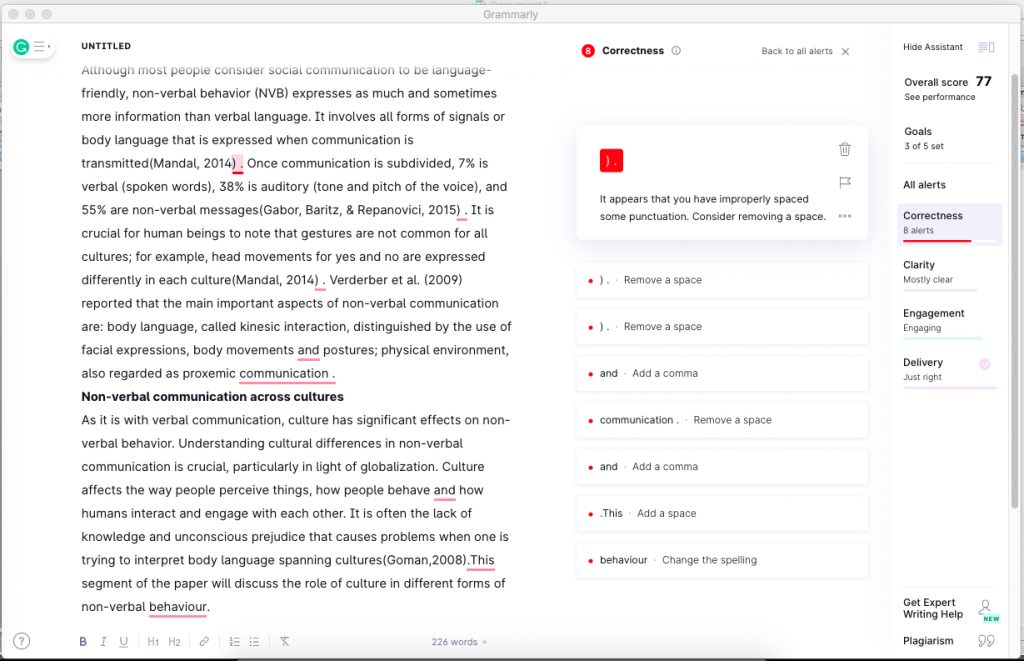

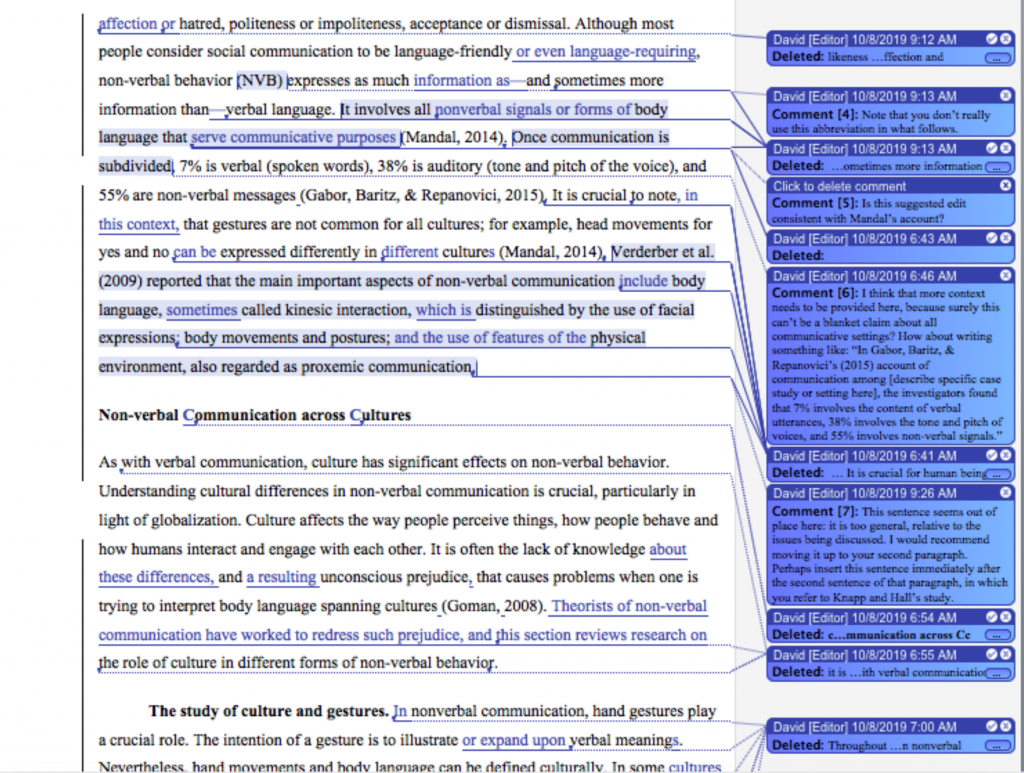

TLDR, the following screengrabs will give you a clear enough picture of what Grammarly can and can’t do for writers:

Screengrab 1: Grammarly’s Free service provides the following editing suggestions.

Screengrab 2: Grammarly’s Premium service provides the following editing suggestions.

Screengrab 3: A human editor’s edits of the same written passage.

Submit a job to WordsRU’s human editors.

Test 1: Business writing

Grammarly gives documents an overall score, somewhat like a grade on a paper written for school or university. What score your document receives depends in part on the ‘goals’ you have set for the editing tool. But to be able to toggle between all the available goals—‘general’ vs. ‘knowledgeable’ audience, ‘business’ vs. ‘academic’ subject domain, etc.—you have to purchase a subscription to the premium-level service.

According to the Free service, the lowest-scoring document of the three was the business-writing sample. This document received an overall rating of 85, both when I selected ‘general’ and when I selected ‘knowledgeable’ readers as my target audience.

The Free service identified only one ‘correctness’ issue: it flagged the word ‘own’, used in a construction along the lines of ‘teams that manage their own growth’. Suggesting that this word fell short of the standard of ‘clarity’, the system gave me this warning: ‘creates a tautology’. In flagging the phrase containing the word ‘own’, the system appeared to identify a non-issue: the phrase struck me as being neither unclear nor tautologous given its larger context.

Mixed messages

Also, since the system indicated that my document was ‘looking good’ in the area of ‘correctness’, ‘very engaging’ relative to the standard of ‘engagement’, ‘just right’ when it came to ‘delivery’ or presentation, and ‘mostly clear’ vis-à-vis the criterion of ‘clarity’, I wasn’t sure why the sample passage had received a B grade overall.

In the Free service, users can’t choose between subject domains on the ‘goals’ page, so I wasn’t able, at this stage, to test whether Grammarly would score the document more generously relative to other business-related documents.

Would the Premium service shed any additional light on why the business document received a B grade? I ran the sample through the editing tool again, this time after upgrading my desktop app to the Premium version.

Grammarly’s Premium version offers a more elaborate reasoning for errors

Because of the upgrade, I could now select ‘business’ as the subject domain. Choosing this option, however, did not change the overall score. Nor did it change the favourable ratings the sample received in the areas of correctness, clarity, engagement, and presentation. What the Premium service did provide was a longer list of flagged items, or ‘alerts’. I inferred that this list of alerts, not provided by the Free service, is what accounted for the score of 85/100.

In addition to the construction involving ‘own’ that had been flagged before, eight other alerts now appeared:

- One, marked ‘engagement: vocabulary’, suggested that the word ‘greater’ is sometimes overused, and suggested ‘more significant’ as a synonym.

- Two of the other alerts concerned constructions featuring a passive verb.

- The remaining five called for sentences to be rewritten.

As with the flagging of the phrase containing ‘own’, all of these additional alerts struck me as being false positives.

On analysis, I found that ‘greater’ had not been used more than once in the sample document, and the passive constructions were there for reasons of emphasis.

In addition, in the case of the flagged sentences, the system apparently failed to pick up that these parts of the sample were bulleted items arranged in a list—a format that is particularly effective for web pages.

The verdict on Grammarly’s use for business documents

In summary, in the feedback it offered on the business sample, Grammarly demonstrated impressive technical knowledge of grammar, but fell short when it came to the three Cs—Context, Content, and Comment.

The tool proved itself to be adept at picking out grammatical and stylistic features, such as passive verbs and clauses lacking a main verb, that may be problematic in some contexts. But it appears to lack the more global contextual knowledge concerning genre, format, and purpose that a human editor brings to the table, or keyboard.

Such contextual knowledge allows for true positives, or genuine problem spots, to be distinguished from false positives when it comes to grammar checking, proofreading, and editing.

In other words, awareness of context shapes judgments about content. In turn, judgments about content determine what sorts of comments are likely to be helpful and productive, in connection with any given portion of a document.

But how would Grammarly fare with the other two test documents?

Find out how WordsRU can help your business.

Test 2: Creative project

The editing tool assigned the second-lowest score, 93, to the creative project. In testing how this sample would be treated by the Free service, I switched the target audience from ‘knowledgeable’ to ‘general’, and the score declined from 93 to 89. One additional alert, in the category of ‘hard-to-read text’, appeared when I made this switch. But the system once more nudged me to upgrade to the Premium service to get more details about these issues.

Here the system seemed to be picking up on challenges posed by my creative project, which combines ideas from philosophy and other fields with fictional writing. Again, however, a human editor, because of global contextual knowledge, would be able to offer more targeted editorial advice. It takes a human editor to distinguish between passages that are needlessly hard to read, on the one hand, and passages that ask readers to interpret them in light of a larger aesthetic design, on the other hand.

Interestingly, when I ran this same creative sample through the Premium service, with the default goals still in place, the score rose from 93 to 94. As with the Free service, though, the score declined to 89 when I selected a general vs. knowledgeable target audience. When I toggled from ‘general’ to ‘creative’ as the subject domain, the score again rose to 94, even for general readers.

The Premium service again provided more information about potential writing issues than the Free service had identified. In this case, the system went into detail about the five alerts mentioned in the feedback provided by the Free service.

Under the category of ‘engagement: variety’, the system provided alerts for a couple of words that were repeated in the sample, and it also underlined two longer sentences, suggesting that they be shortened by ‘removing any unnecessary words’ or splitting them up.

In my dual role as editor and author, I readily admit that one of the verbal repetitions flagged by the system was inadvertent. But I balked at the suggestion that the word ‘unwavering’ in the phrase ‘unwavering commitment’ exemplified hard-to-read writing for the general reader, whether in creative or in other contexts.

Likewise, one of the instances of repetition was strategic, and the system’s blanket prohibition of passive constructions again struck me as too sweeping. Both of the longer sentences flagged by the system, I noticed, contained dashes. Had the system developed, perhaps because of the data set on which it had been trained, a bias against sentences featuring dashes?

Grammarly has trouble sorting the editorial wheat from the algorithmic chaff

Overall, then, the second test produced results similar to those produced by the first. The system picked up particular grammatical and stylistic features that, in some contexts, can indeed be problematic. However, it could not formulate the more global judgments about contextual appropriateness that are a speciality of human editors.

In consequence, the tool had trouble sorting the editorial wheat from the algorithmic chaff. It did not draw clear enough distinctions between true positives, or real writing issues, and false positives, or non-issues given the larger context.

Check out WordsRU’s services for authors.

Test 3: Academic writing

Finally, in the third test, the Free service assigned the academic document an overall score of 97. This sample received a correctness rating of ‘looking good’, a clarity rating of ‘very clear’, an engagement rating of ‘engaging’, and a delivery/presentation rating of ‘just right’.

When I adjusted the target audience from knowledgeable to general, the score went down from 97 to 86, as might have been expected with an academic sample.

Even with the audience set to knowledgeable, the system flagged four ‘additional writing issues’, all in the category of ‘hard-to-read text’. The alerts indicated:

- two word-choice issues

- a ‘faulty tense sequence’

- an issue involving ‘punctuation in a compound/complex sentence’.

When I used the Premium service to dive down into the details about the feedback on the academic sample, once again I found myself inclined to label as false positives the writing issues that had been called out by the system.

The punctuation issue involved adding a superfluous comma, and the purported faulty tense sequence stemmed from another misreading of context, which led the tool to make an incorrect suggestion.

In context, the present perfect tense, not the past perfect tense recommended by the editing tool, was appropriate. The two word-choice issues concerned a word that, because it named the central topic of the document, bore repeating.

In the Premium service, when I fine-tuned the subject domain by switching from ‘general’ to ‘academic’, the assigned score went up from 97 to 99, and the two word-choice items disappeared from the list of writing issues. The reason for this change may be that the system made a greater allowance for restatements of key topics in academic writing as compared with general writing. But the tense error, along with the superfluous comma, still appeared in the Premium service’s list of issues.

This third sample supported my main conclusions in my review of this top-ranked editing tool. Grammarly, though skilled at finding potential problems with sentences, phrases, and words, lacks the global contextual knowledge—about genre and purpose, about topical relevance, about consistency of phrasing—that human editors are able to leverage.

As a result, even when users exploit the fine-tuning made possible by the Premium service, the system is likely to throw up false positives.

See how WordsRU can help academics and students get their best draft.

Grammarly best suits those who may need it least

Paradoxically, then, the editing tool may be best suited for those who need it least, because only a user with editing skills will be able to recognise which of the issues flagged by the system are, in fact, non-issues. But in that case, the user’s editing skills are such that they don’t require support from an automated editing tool.

Five points of difference between automated and human editors

The conclusions just outlined can be distilled down even further—into five points of difference between automated and human editors for authors, academics, and businesses.

In each case, the points of difference turn on human editors’ facility with the three C’s, or Context, Content, and Comment.

Given the current state of the art, it is difficult to capture this facility in the form of algorithms, or to generate it automatically through bottom-up processes of machine learning.

5 reasons why businesses should choose human editors over Grammarly and other editing tools

- Better recognition of the formats most suitable for online versus in-person marketing channels

- A better understanding of the styles, and kinds of organisation, most appropriate for different types of business documents (proposals vs. marketing plans vs. reports for investors)

- Better recognition of how unusual words or turns of phrase, rather than being errors, can play a role in advertising campaigns

- A keener sense of the distinctions among different groups of customers or clients being targeted by a report, website, or other document

- A better understanding of the specific kinds of feedback and writing advice that will be most useful in business-writing contexts.

WordsRU offers editing, proofreading and copywriting services for businesses.

5 reasons academics and students should choose human editors over Grammarly and other editing tools

- Better recognition of the need for nuance and precision in academic argument

- A better understanding of the styles, and modes of organisation, most appropriate for different kinds of academic writing (abstracts or grant proposals vs. articles or thesis chapters)

- Better recognition of terms and phrases that, rather than being errors, constitute neologisms or other specialised vocabulary items in technical disciplines

- A keener sense of the larger dialogues within fields and subdisciplines, and how academic documents need to participate in them

- A better understanding of the kinds and amount of writing advice that will be most productive in academic contexts.

WordsRU can help your work meet the highest academic standards.

5 reasons authors should choose human editors over Grammarly and other editing tools

- A better understanding of how word choice, phrasing, paragraph structure, and other features of creative work fit within authors’ larger aesthetic aims

- A better understanding of the styles, and modes of organisation, most appropriate for different kinds of creative projects (memoir vs. creative nonfiction vs. fiction)

- Better recognition of how the different parts of documents shape and also complement one another, as with narration vs. dialogue

- A better understanding of authors’ specific goals with respect to scene-setting, characterisation, plotting, and so forth

WordsRU can assist you in any stage of your writing process.

Is a human editor the ultimate upgrade?

I noted, with great interest, that Grammarly offers ‘Expert Writing Help’, a new service that gives Premium subscribers access to a human proofreader.

What does it mean for the providers of an automated editing tool to offer this escape hatch into the realm of human editing? In calling attention to this feature, am I just displaying predictable defensiveness in the face of an AI-driven service that might one day replace me? Or does the existence of this feature, rather, confirm what I concluded based on the results of my experiment?

As I see it, the feature points to the irreplaceable contextual knowledge on which we human editors draw, in order to separate the wheat of constructive feedback from the chaff of an overscrupulous search for errors.

Read WordsRU’s reviews. Our clients have given us 4.93/5 stars.

Get a free sample edit to get the WordsRU advantage.